A Better Road to AI for Mental Health

Are Companies Building Mental Health Tools for Healing or Hooks for Engagement?

Your Neuralink alert flashes red: 'Elevated cortisol detected. Initializing mood stabilization.' Within seconds, your anxiety about the upcoming presentation melts away - not because you've processed it, but because an algorithm decided you shouldn't feel it. The moment your neurochemistry shifts, the chip releases its perfect cocktail of molecules to restore balance. This could be mental health in 2050, a time when emotional regulation isn't a skill to learn, but rather a switch to flip.

But before you dismiss this as science fiction, consider how quickly we're normalizing AI in our emotional lives. The other day, my mom didn't pick up her phone. Usually, I'd wait and try again later, but I needed advice about something that was bothering me. So, I did what any person might do in 2025. I opened my AI chatbot of choice and started typing. As I waited for its response, it hit me: when did getting life advice from an AI start feeling so... normal?

Would you believe that 32% of people say they'd be open to using AI instead of a human therapist? In India, that number jumps to 51%, while in the US and France, it's around 24%. People are drawn to these tools for reasons you may not expect: no fear of judgment, 24/7 availability, and complete privacy. Some of these reasons certainly resonate with me.

Companies are racing to meet this demand: Woebot delivers CBT-based therapy through adaptive chatbots, Talkspace and BetterHelp use AI to match patients with therapists, and Wysa combines AI with evidence-based techniques to provide accessible mental wellness support, including an AI chatbot that acts as an accountability companion. As one user of Wysa put it, "It offers additional support and can be especially helpful on a day-to-day basis, complementing the work done in therapy." A crucial distinction: these tools are becoming digital mental health companions, not replacements for human connection. But their importance is increasing by the day, so what’s in store for the future?

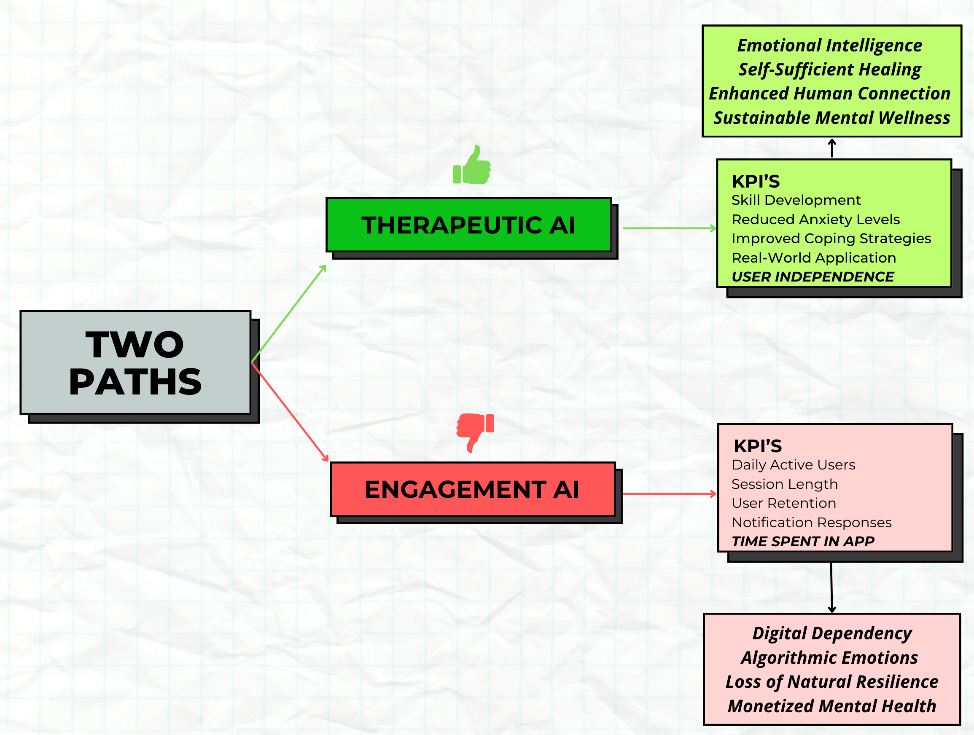

While these companies all promise to improve mental health, their approaches could lead us down radically different paths (see figure).

Imagine two versions of the future: In one, Engagement AI follows the social media playbook—optimized to keep you hooked, feeding off your emotional vulnerability, turning your mental health into another endless scroll. In the other, Therapeutic AI takes the path of actual healing—focusing on building real coping skills and emotional strength, prioritizing your growth over app engagement.

This isn't just theoretical; it's happening right now in how mental health apps are funded and built. Take something common, like anxiety about a job interview. Today's engagement-focused apps might send you hourly affirmations (You've got this!), suggest endless meditation sessions (Feeling anxious? Try another 10-minute breathing exercise!), or constantly check on how you're feeling (Rate your anxiety level right now!). Each notification is designed to pull you back into the app. Every interaction becomes another data point for user engagement, not a measure of your actual progress.

Why? Because investors want to see "engagement metrics" - daily active users, session length, retention rates. Why teach someone to process anxiety when you can keep them coming back for quick fixes? Why help someone develop emotional resilience when you can create dependency on your platform? The algorithms learn to hook into our vulnerabilities, not heal them.

If we want to avoid that future, Therapeutic AI may be the better solution. It would guide you through understanding your interview anxiety, help you prepare effectively, and teach you coping strategies you can use anywhere, anytime. The goal isn't to have you constantly checking the app for comfort, but to help you build genuine confidence and capability, as a human therapist would. Instead of maximizing time spent in the app, the therapeutic approach maximizes your growth outside of it, through reduced anxiety levels, improved coping skills, and increased emotional awareness.

The challenge?

This therapeutic approach might not look as good on an investor pitch deck. From accelerators to growth metrics to exit strategies - everything pushes companies toward engagement-based models. Even well-meaning founders who want to build genuine therapeutic tools face pressure to adopt "sticky" features just to survive in a market that prioritizes user retention over user recovery.

Here's where we need to pause and think: Let’s say we can regulate every emotional dip and spike. Are we still truly experiencing human emotion? If an AI can prevent you from ever feeling anxious about that job interview or heartbroken over that relationship…should it?

Imagine if we could shift this paradigm by 2030. AI mental health tools could be evaluated not by daily active users but by genuinely improved lives. The most valuable companies would not be those that keep users perpetually engaged, but those that help people become more emotionally resilient and independent. The technology exists, and the user base for AI-assisted mental health is already growing; we just need to reimagine what success looks like.

There's something fundamentally human about riding the waves of our emotions, even the difficult ones. The future of mental health doesn’t have to be about eliminating the emotional pain of being human; it can be about having better tools to understand and navigate it.

What do you think? Should AI in mental health be designed to keep us engaged, or to help us grow?

-Pranav Varanasi | Healthcare AI Consultant, Deloitte Consulting LLP

-Abhishek Bhagavatula | Senior Manager, Deloitte Consulting LLP

Interesting read Pranav - thanks for sharing.

A few questions:

1. How do we assess a good outcome? Is it when someone stops using a therapeutic AI app altogether?

2. I’ve always hesitated about replacing in-person interactions (especially emotional support ones) with AI - but maybe an AI therapist is able to be there when a human isn’t? And the barrier to entry is much lower? Is there a way we can use therapeutic AI to bridge the gap & make in-person therapy more appealing?